EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks

TLDR

Basically, instead of increasing only the number of neurons, the depth of the network, or the input image resolution, increase all three in tandem to get more accuracy per parameter. Seems unsurprising. They used a simple width / depth / resolution formula to find scaling levels that worked well.

Summary

EfficientNet is a family of convolutional neural networks introduced by Mingxing Tan and Quoc V. Le in a paper published in 2019. Their research focused on a systematic approach to model scaling, introducing a new scaling method that uniformly scales all dimensions of the network {width, depth, and resolution} with a fixed set of scaling coefficients.

Previously, when researchers wanted a larger model, they typically scaled only one of the model's depth {number of layers}, width {number of channels per layer}, or resolution {input image size}. Each of these improves accuracy up to a point, after which the returns diminish.
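To make the three dimensions concrete, here is a rough cost model for a stack of identical convolution layers. It is only a sketch under simplifying assumptions (stride 1, square feature maps, equal input and output channels), and the function name `conv_stack_flops` is made up for illustration. It shows how much compute each single-dimension change costs.

```python
def conv_stack_flops(depth: int, width: int, resolution: int, kernel: int = 3) -> int:
    """Approximate multiply-adds for `depth` identical stride-1 conv layers.

    Assumes `width` input and output channels per layer and a
    resolution x resolution feature map that never shrinks.
    """
    per_layer = kernel * kernel * width * width * resolution * resolution
    return depth * per_layer


base = conv_stack_flops(depth=4, width=32, resolution=64)
deeper = conv_stack_flops(depth=8, width=32, resolution=64)    # 2x depth
wider = conv_stack_flops(depth=4, width=64, resolution=64)     # 2x width
sharper = conv_stack_flops(depth=4, width=32, resolution=128)  # 2x resolution

print(deeper / base)   # 2.0
print(wider / base)    # 4.0
print(sharper / base)  # 4.0
```

Under this model, compute grows linearly with depth but quadratically with width and resolution, so the three knobs are not equally expensive.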

The EfficientNet paper argued that rather than arbitrarily choosing one scaling dimension, it is better to scale all three dimensions together in a balanced way. They proposed a compound scaling method that uses a single compound coefficient \( \phi \) to scale up CNNs in a more structured manner.

The intuition behind compound scaling is that if the input image is \( s \) times larger, the network needs more layers to increase its receptive field {depth} and more channels to capture the finer-grained patterns in the larger image {width}. The three dimensions are interdependent, so depth, width, and resolution are scaled up together at fixed ratios.

The authors started from a small baseline network (EfficientNet-B0) and scaled it up to obtain EfficientNet-B1 to B7. They ran a small grid search on B0, with \( \phi \) fixed at 1, to find the best depth, width, and resolution coefficients (\( \alpha \), \( \beta \), and \( \gamma \), respectively). These constants were then held fixed while \( \phi \) was increased to scale up the baseline. The compound scaling method can be summarized in the formula:

\[
\begin{aligned}
\text{depth: } d &= \alpha^\phi \\
\text{width: } w &= \beta^\phi \\
\text{resolution: } r &= \gamma^\phi
\end{aligned}
\]

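Where \( \phi \) is the compound coefficient, and \( \alpha, \beta, \gamma \) are constants determined by a small grid search such that \( \alpha \cdot \beta^2 \cdot \gamma^2 \approx 2 \), with \( \alpha \geq 1, \beta \geq 1, \gamma \geq 1 \). The squared terms reflect that the FLOPS of a regular convolution grow linearly with depth but quadratically with width and resolution, so under this constraint total FLOPS grow by roughly \( 2^\phi \).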
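The formula can be sketched in a few lines of Python. The constants below are the values the paper reports for the B0 baseline (\( \alpha = 1.2 \), \( \beta = 1.1 \), \( \gamma = 1.15 \)); the function name `compound_scale` is hypothetical. This illustrates the formula only and is not necessarily the exact configuration of the released B1 to B7 models.

```python
# Coefficients reported in the EfficientNet paper for the B0 baseline.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15


def compound_scale(phi: float, alpha: float = ALPHA, beta: float = BETA,
                   gamma: float = GAMMA) -> tuple[float, float, float, float]:
    """Return (depth, width, resolution, FLOPS) multipliers for a given phi."""
    depth_mult = alpha ** phi
    width_mult = beta ** phi
    res_mult = gamma ** phi
    # FLOPS scale linearly with depth, quadratically with width and resolution.
    flops_mult = depth_mult * width_mult ** 2 * res_mult ** 2
    return depth_mult, width_mult, res_mult, flops_mult


for phi in range(4):
    d, w, r, f = compound_scale(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, "
          f"resolution x{r:.2f}, FLOPS x{f:.2f}")
```

With these constants, \( \alpha \cdot \beta^2 \cdot \gamma^2 \) comes to about 1.92, so each increment of \( \phi \) slightly less than doubles the estimated FLOPS.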

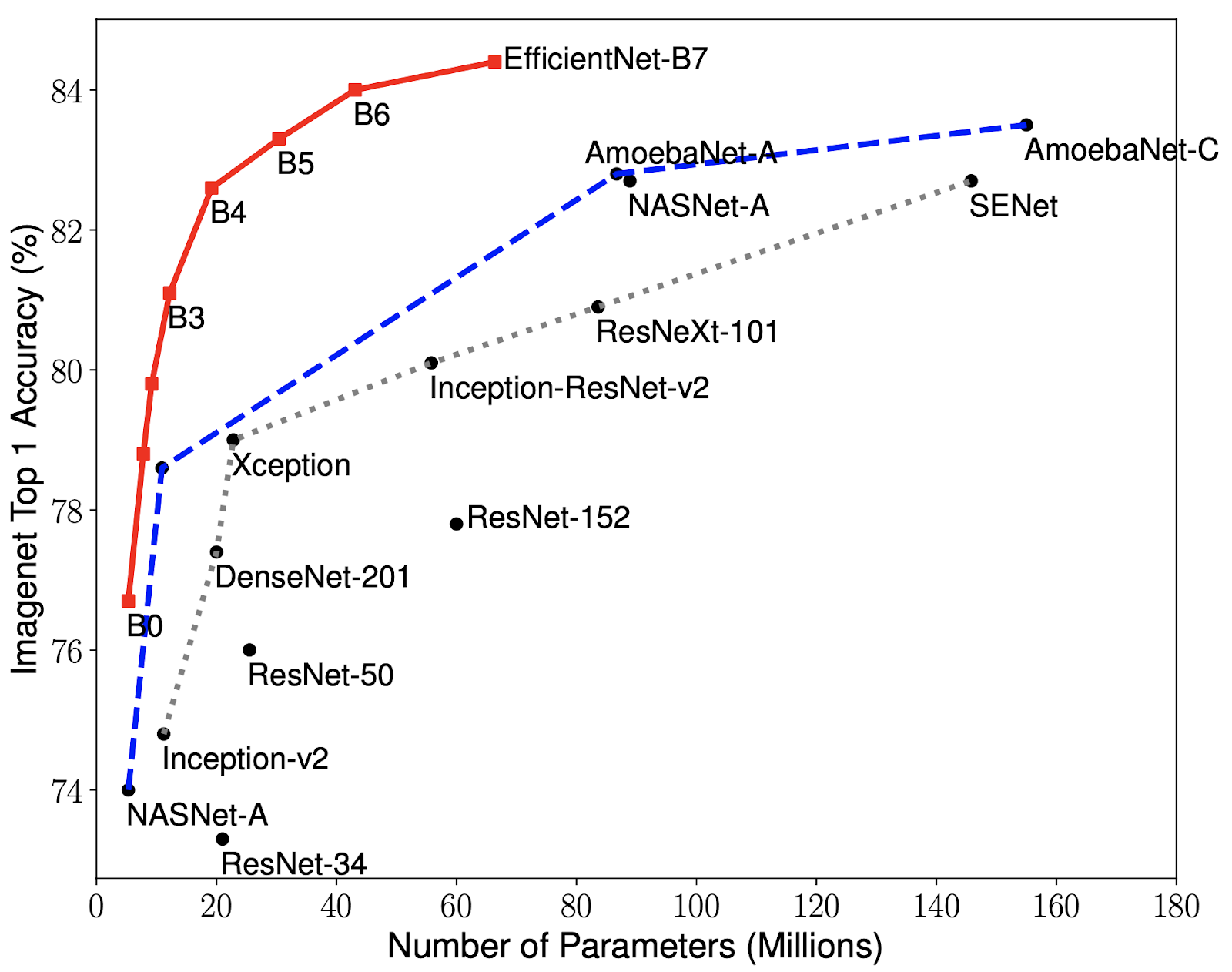
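The constrained grid search mentioned above can be sketched as a simple enumeration. The grid step of 0.05, the upper bound of 2.0, and the tolerance of 0.1 are assumptions chosen for illustration, as is the function name `candidate_coefficients`; the paper only describes a small grid search under the constraint \( \alpha \cdot \beta^2 \cdot \gamma^2 \approx 2 \).

```python
from itertools import product


def candidate_coefficients(step: float = 0.05, upper: float = 2.0,
                           target: float = 2.0, tol: float = 0.1):
    """List (alpha, beta, gamma) triples, each >= 1, whose estimated
    FLOPS multiplier alpha * beta^2 * gamma^2 is within `tol` of `target`."""
    n = int(round((upper - 1.0) / step)) + 1
    grid = [round(1.0 + i * step, 2) for i in range(n)]
    keep = []
    for a, b, g in product(grid, repeat=3):
        if abs(a * b ** 2 * g ** 2 - target) <= tol:
            keep.append((a, b, g))
    return keep


cands = candidate_coefficients()
print(len(cands), "candidate triples")
print((1.2, 1.1, 1.15) in cands)  # the paper's values fall inside this grid
```

In a real search, each surviving triple would be used to scale the baseline with \( \phi = 1 \), the scaled model would be trained, and the triple with the best validation accuracy would be kept. That training step is omitted here.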

As a result of this scaling approach, EfficientNet models matched or exceeded the accuracy of previous state-of-the-art models on ImageNet with far fewer parameters and FLOPS {hence the name}. The largest model, EfficientNet-B7, achieved state-of-the-art accuracy on ImageNet (84.4% top-1 and 97.1% top-5), while being 8.4x smaller and 6.1x faster at inference than the best existing ConvNet.

The EfficientNet paper has had significant implications for the field of deep learning. Its compound scaling method has provided a new, systematic way of scaling up models that is more effective than previous methods. Moreover, the efficiency of EfficientNet models has made them a popular choice for applications where computational resources are a constraint. The work has also influenced subsequent research, with many papers building on the ideas presented in EfficientNet.

Below is a representation of the EfficientNet models (B0 to B7) and their performance comparison with other models:

The image compares the traditional approaches to model scaling {scaling width, depth, or resolution} with EfficientNet's compound scaling. In the traditional approaches, one dimension is scaled while the others are held fixed. This is represented by three graphs in which the scaled dimension increases along the x-axis and accuracy on the ImageNet validation set is on the y-axis. The graphs show that each traditional approach improves accuracy up to a point, after which accuracy plateaus or even decreases.

On the other hand, the compound scaling method of EfficientNet is represented by a 3D graph with width, depth, and resolution on the three axes. This shows that EfficientNet scales all three dimensions together, leading to better performance.

Tags: EfficientNet, 2019, Optimization